Is PostgreSQL/PostGIS suitable for Big Data? This is a question I have been asking myself for a while, and I now have the opportunity to test it with a PostgreSQL instance from Amazon Web Services (AWS); after all, if such a service cannot deal with “Big Data”, who can?

I have a dataset with Tweets in the city of Barcelona, over a time period. The entire dataset amounts to about 3 million points (82 MB), and I have some subsets: a smaller collection of about 2 million points (55 MB) and 300 000 points (8.2. MB).

We can argue whether this dataset is, or not, Big Data; but IMHO, a table with more than 1 million records starts to get “heavy” for any relational database. Now before doing any computations of spatial algorithms (e.g.: convex hull, point in polygon), the very first step is to actually load this dataset into the database. And this is where I started to struggle.

QGIS has a plugin called SPIT, that allows to import a Shapefile into PostGIS. Unfortunately it seems to be extremely slow any of the three files that I mentioned above (including the smaller one).

Switching to the command line, where I have more control knowledge about the processes that are running, I tried shp2pgsql, a tool for importing Shapefiles that comes with the PostGIS installation.

On Linux it is possible to convert and import the Shapefile in one go, redirecting the output with pipe:

shp2pgsql -s <SRID> -c -D -I <path to shapefile> <schema>.<table> | \

psql -d <databasename> -h <hostname> -U <username>

Since this command was really slow (unresponsive?) I decided to do it in two steps, to see where it was hanging:

- Create an sql file with the insert statements.

- Load this sql file into the database.

Unsurprisingly, the SQL file with the statements was generated quite quickly, and the processing effort was being spent on the insert queries. This situation was verified with the three Shapefiles, even when I inserted a spatial index (Gist). After thinking a bit, I came to the conclusion that the spatial index should be created within the “CREATE TABLE” statement, so it should actually speed up the queries a bit (but I did not confirm this guess).

My next attempt, was to use ogr2ogr, the GDAL tool to convert between vector formats.

ogr2ogr -f "PostgreSQL" PG:"host=host user=user dbname=db password=pass" data.shp

I have to say that I read some suggestion to prefer shp2pgsql over this tool, and in fact it was quite slow as well (at this point I was only testing the smallest of the Shapefiles).

My conclusion up to this moment, is that it is quite expensive in terms of time (and processor) to import a large dataset into a PostGIS. Some ideas that could be explored:

- Upgrade the PostGIS server (would scale vertically make any difference?).

- Upload the data into the Amazon server and run the import tool from there. I am not sure whether it is possible to do that, but it seems like a good improvement to do this operation locally, and using the power of the Amazon Server, rather than my desktop computer.

- Split the Shapefile into chunks of data. I am not entirely sure how to do this, and any suggestions would be welcome.

Next steps: once I manage to import a large Shapefile into my PostGIS instance, I will post some feedback about the computation of spatial operations.

Update: Apparently I underestimated the fact that I am importing data over a network connection, with a limited latency. The best thing would be to make the import locally, an option that I unfortunately don’t have, since the Amazon RDS does not give me SSH access (it is a service, not a machine!). I decided to switch to a new approach: import a plain csv file into a database table, using the /copy command, and that was actually really fast (a few seconds).

psql database -U user -h host.eu-west-1.rds.amazonaws.com -c "\copy newt_table from 'data.csv' with DELIMITER ','"

Of course, I then had to run a query populate the point geometry from the float (x,y) values, and create an update a spatial index (for a matter efficiency it is recommended that indexes are created after, and not during the import!).

UPDATE new_table SET geom = ST_SetSRID(ST_MakePoint(lon,lat),4326);

CREATE INDEX [indexname] ON [tablename] USING GIST ( [geometryfield] );

VACUUM ANALYZE [table_name] [column_name];

That was still considerably fast (although not as much as the csv import!), and my guess is: it is because I are now running queries on the remote machine (and not uploading data via an internet connection).

Following some very usefull on Stack Overflow, I decided to retry my previous approaches, tuning the configuration parameters.

First I tried the OGR command line tool, but this time with the config flag “PG_USE_COPY YES”, to force dumping of the data:

ogr2ogr -progress -f PostgreSQL PG:"dbname='database' user='user' host='host.eu-west-1.rds.amazonaws.com' port='5432' data.shp --config PG_USE_COPY YES -nlt POINT -nln import_ogr

Although speed has improved massively, it still took me 1:03:00 to run this query.

Then I tried the shp2pgsql tool, with the “-D” flag, which also forces the dump of data:

shp2pgsql -D -s 4326 data.shp import_shp2pgsql | psql

This was remarkably fast: 00:01:04

Comparing the two imports, we can see that shp2pgsql did not create any index (which we already commented is a costly operation), while ogr2ogr created a gist index on the spatial field. The extra step of creating an index, has to be added to the shp2pgsql import, but as we have seen before, that operation is not a problem since we are then operating on the server.

I conclude this review, stating that shp2pgsql has a great performance in the task of loading a Big Data spatial table into a remote PostGIS server (e.g.: Amazon RDS). It is recommended that the “-D” flag is passed to the tool, and that no indexes are created during this operation.

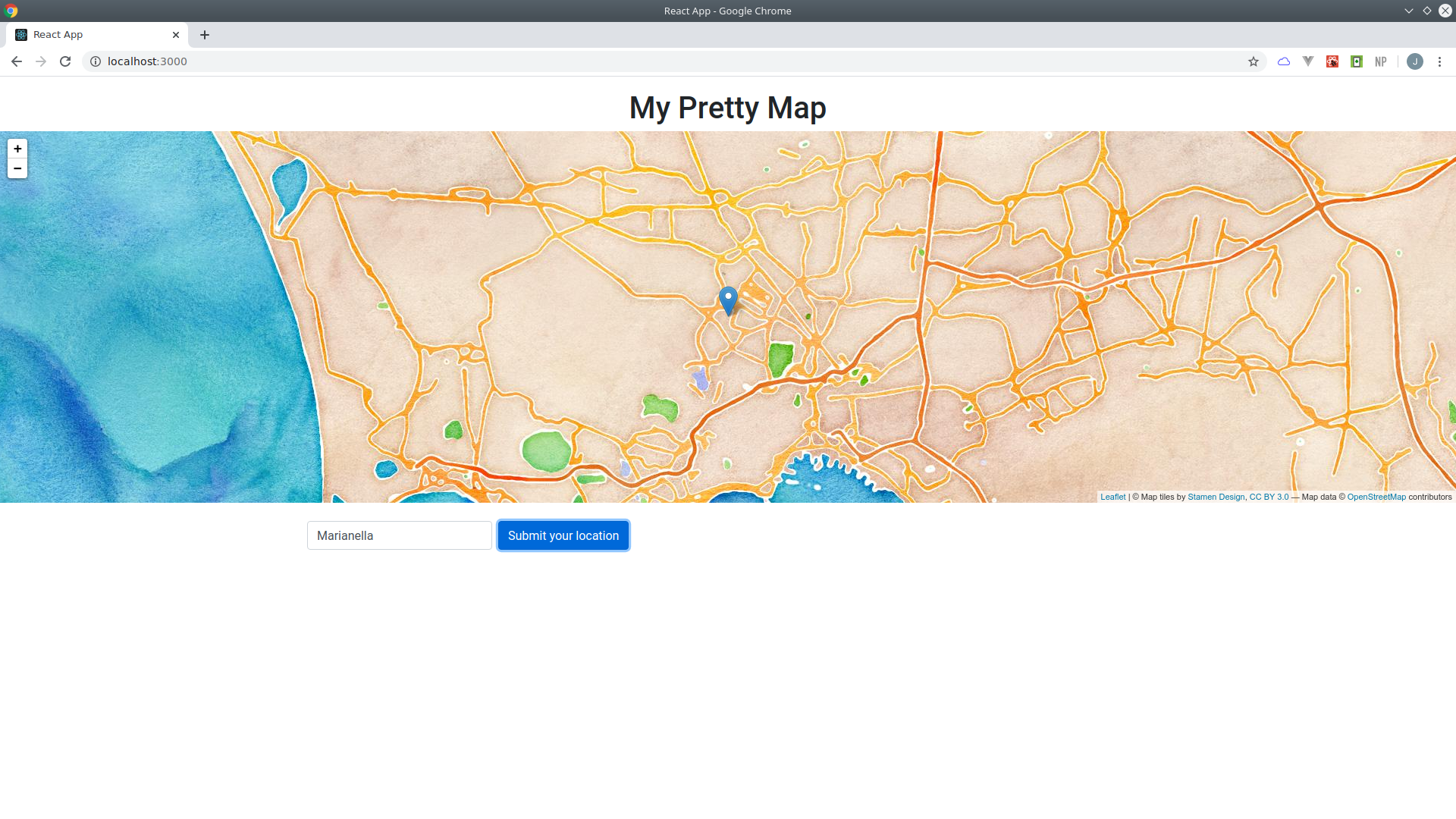

Bellow we can see the PostGIS geometry of the imported table, with more than 3 million points: